audio-ldm

Maintainer: haoheliu


| Property | Value |
|---|---|
| Model Link | View on Replicate |
| API Spec | View on Replicate |
| Github Link | View on Github |
| Paper Link | View on Arxiv |


Model overview

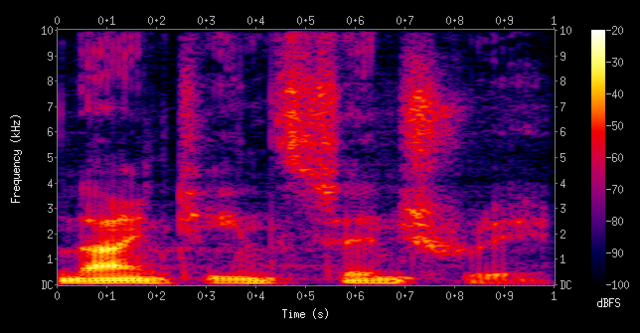

audio-ldm is a text-to-audio generation model created by Haohe Liu, a researcher at CVSSP. It uses a latent diffusion model to generate audio from text prompts. The approach mirrors stable-diffusion, a widely used latent text-to-image diffusion model, applied to the audio domain. Related models include riffusion, which generates music from text, and whisperx, which transcribes audio. Unlike those, audio-ldm aims at general-purpose audio generation from text.

Model inputs and outputs

The audio-ldm model takes in a text prompt as input and generates an audio clip as output. The text prompt can describe the desired sound, such as "a hammer hitting a wooden surface" or "children singing". The model then produces an audio clip that matches the text prompt.

Inputs

- Text: A text prompt describing the desired audio to generate.

- Duration: The length of the generated audio clip in seconds. Longer durations may cause out-of-memory errors.

- Random Seed: An optional random seed to control the randomness of the generation.

- N Candidates: The number of candidate audio clips to generate, with the best one selected.

- Guidance Scale: A parameter that controls the balance between audio quality and diversity. Higher values lead to better quality but less diversity.
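The inputs listed above can be sketched as a small payload builder. This is a minimal illustration, not the model's actual API: the key names (`text`, `duration`, `random_seed`, `n_candidates`, `guidance_scale`), the default values, and the validation rules are all assumptions chosen to mirror the list, so check the API spec for the real field names and ranges before using it.

```python
from typing import Any, Dict, Optional


def build_audio_ldm_input(
    text: str,
    duration: float = 5.0,
    random_seed: Optional[int] = None,
    n_candidates: int = 3,
    guidance_scale: float = 2.5,
) -> Dict[str, Any]:
    """Assemble and sanity-check an input payload for a text-to-audio request.

    All key names and default values are hypothetical, for illustration only.
    """
    if not text.strip():
        raise ValueError("text prompt must not be empty")
    if duration <= 0:
        raise ValueError("duration must be positive")
    if n_candidates < 1:
        raise ValueError("n_candidates must be at least 1")
    if guidance_scale < 0:
        raise ValueError("guidance_scale must be non-negative")

    payload: Dict[str, Any] = {
        "text": text.strip(),
        "duration": duration,
        "n_candidates": n_candidates,
        "guidance_scale": guidance_scale,
    }
    # Include the seed only when the caller wants reproducible output.
    if random_seed is not None:
        payload["random_seed"] = random_seed
    return payload
```

Calling `build_audio_ldm_input("a hammer hitting a wooden surface", random_seed=42)` returns a dictionary that could be passed to whichever client is used to run the model. Leaving the seed out keeps it absent from the payload, so the service is free to pick its own.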

Outputs

- Audio Clip: The generated audio clip that matches the input text prompt.
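The N Candidates input says that several clips are generated and the best one is returned, but this summary does not say how "best" is decided. One plausible criterion, shown here purely as an assumption, is to embed the prompt and each candidate clip in a shared vector space and keep the candidate most similar to the prompt. The embeddings below are placeholder numbers; a real system would need a text and audio embedding model, which is not specified here.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def pick_best_candidate(
    text_embedding: Sequence[float],
    candidate_embeddings: Sequence[Sequence[float]],
) -> int:
    """Return the index of the candidate whose embedding best matches the prompt.

    Hypothetical selection rule: highest cosine similarity wins.
    """
    if not candidate_embeddings:
        raise ValueError("need at least one candidate")
    scores = [cosine_similarity(text_embedding, c) for c in candidate_embeddings]
    return max(range(len(scores)), key=scores.__getitem__)
```

Under this rule, raising N Candidates trades extra compute for a better chance that at least one clip matches the prompt closely.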

Capabilities

audio-ldm can generate a wide variety of audio from text prompts, including speech, sound effects, and music. It also supports audio-to-audio generation, producing a new clip whose sound events resemble those of a provided input clip. In addition, it supports text-guided audio-to-audio style transfer, which alters the sound of an input clip to match a text description.

What can I use it for?

audio-ldm could be useful for various applications, such as:

- Creative content generation: Generating audio content for use in videos, games, or other multimedia projects.

- Audio post-production: Automating the creation of sound effects or music to complement visual content.

- Accessibility: Generating audio descriptions for visually impaired users.

- Education and research: Exploring the capabilities of text-to-audio generation models.

Things to try

When using audio-ldm, try providing more detailed and descriptive text prompts to get better quality results. Experiment with different random seeds to see how they affect the generation. You can also try combining audio-ldm with other audio tools and techniques, such as audio editing or signal processing, to create even more interesting and compelling audio content.
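The seed experiment suggested above can be organized as a small sweep. The sketch below only builds the list of settings to try; it does not call the model. The key names (`text`, `random_seed`, `guidance_scale`) are assumptions for illustration and may differ from the real API.

```python
from itertools import product
from typing import Any, Dict, Iterable, List


def sweep_settings(
    prompt: str,
    seeds: Iterable[int],
    guidance_scales: Iterable[float],
) -> List[Dict[str, Any]]:
    """Build one hypothetical input payload per (seed, guidance scale) pair."""
    return [
        {"text": prompt, "random_seed": seed, "guidance_scale": scale}
        for seed, scale in product(seeds, guidance_scales)
    ]


runs = sweep_settings(
    "children singing in a large hall with heavy reverb",
    seeds=[0, 1, 2],
    guidance_scales=[2.5, 5.0],
)
```

Keeping the prompt fixed while varying one setting at a time makes it easier to tell whether a change in the output comes from the seed or from the guidance scale.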

This summary was produced with help from an AI and may contain inaccuracies - check out the links to read the original source documents!

Related Models

stable-diffusion


Stable Diffusion is a latent text-to-image diffusion model capable of generating photo-realistic images given any text input. Developed by Stability AI, it is an impressive AI model that can create stunning visuals from simple text prompts. The model has several versions, with each newer version being trained for longer and producing higher-quality images than the previous ones.

The main advantage of Stable Diffusion is its ability to generate highly detailed and realistic images from a wide range of textual descriptions. This makes it a powerful tool for creative applications, allowing users to visualize their ideas and concepts in a photorealistic way. The model has been trained on a large and diverse dataset, enabling it to handle a broad spectrum of subjects and styles.

Model inputs and outputs

Inputs

- Prompt: The text prompt that describes the desired image. This can be a simple description or a more detailed, creative prompt.

- Seed: An optional random seed value to control the randomness of the image generation process.

- Width and Height: The desired dimensions of the generated image, which must be multiples of 64.

- Scheduler: The algorithm used to generate the image, with options like DPMSolverMultistep.

- Num Outputs: The number of images to generate (up to 4).

- Guidance Scale: The scale for classifier-free guidance, which controls the trade-off between image quality and faithfulness to the input prompt.

- Negative Prompt: Text that specifies things the model should avoid including in the generated image.

- Num Inference Steps: The number of denoising steps to perform during the image generation process.

Outputs

- Array of image URLs: The generated images are returned as an array of URLs pointing to the created images.

Capabilities

Stable Diffusion is capable of generating a wide variety of photorealistic images from text prompts. It can create images of people, animals, landscapes, architecture, and more, with a high level of detail and accuracy. The model is particularly skilled at rendering complex scenes and capturing the essence of the input prompt.

One of the key strengths of Stable Diffusion is its ability to handle diverse prompts, from simple descriptions to more creative and imaginative ideas. The model can generate images of fantastical creatures, surreal landscapes, and even abstract concepts with impressive results.

What can I use it for?

Stable Diffusion can be used for a variety of creative applications, such as:

- Visualizing ideas and concepts for art, design, or storytelling

- Generating images for use in marketing, advertising, or social media

- Aiding in the development of games, movies, or other visual media

- Exploring and experimenting with new ideas and artistic styles

The model's versatility and high-quality output make it a valuable tool for anyone looking to bring their ideas to life through visual art. By combining the power of AI with human creativity, Stable Diffusion opens up new possibilities for visual expression and innovation.

Things to try

One interesting aspect of Stable Diffusion is its ability to generate images with a high level of detail and realism. Users can experiment with prompts that combine specific elements, such as "a steam-powered robot exploring a lush, alien jungle," to see how the model handles complex and imaginative scenes.

Additionally, the model's support for different image sizes and resolutions allows users to explore the limits of its capabilities. By generating images at various scales, users can see how the model handles the level of detail and complexity required for different use cases, such as high-resolution artwork or smaller social media graphics.

Overall, Stable Diffusion is a powerful and versatile AI model that offers endless possibilities for creative expression and exploration. By experimenting with different prompts, settings, and output formats, users can unlock the full potential of this cutting-edge text-to-image technology.


tango


Tango is a latent diffusion model (LDM) for text-to-audio (TTA) generation, capable of generating realistic audios including human sounds, animal sounds, natural and artificial sounds, and sound effects from textual prompts. It uses the frozen instruction-tuned language model Flan-T5 as the text encoder and trains a UNet-based diffusion model for audio generation. Compared to current state-of-the-art TTA models, Tango performs comparably across both objective and subjective metrics, despite training on a dataset 63 times smaller. The maintainer has released the model, training, and inference code for the research community.

Tango 2 is a follow-up to Tango, built upon the same foundation but with additional alignment training using Direct Preference Optimization (DPO) on the Audio-alpaca dataset, a pairwise text-to-audio preference dataset. This helps Tango 2 generate higher-quality and more aligned audio outputs.

Model inputs and outputs

Inputs

- Prompt: A textual description of the desired audio to be generated.

- Steps: The number of steps to use for the diffusion-based audio generation process, with more steps typically producing higher-quality results at the cost of longer inference time.

- Guidance: The guidance scale, which controls the trade-off between sample quality and sample diversity during the audio generation process.

Outputs

- Audio: The generated audio clip corresponding to the input prompt, in WAV format.

Capabilities

Tango and Tango 2 can generate a wide variety of realistic audio clips, including human sounds, animal sounds, natural and artificial sounds, and sound effects. For example, they can generate sounds of an audience cheering and clapping, rolling thunder with lightning strikes, or a car engine revving.

What can I use it for?

The Tango and Tango 2 models can be used for a variety of applications, such as:

- Audio content creation: Generating audio clips for videos, games, podcasts, and other multimedia projects.

- Sound design: Creating custom sound effects for various applications.

- Music composition: Generating musical elements or accompaniment for songwriting and composition.

- Accessibility: Generating audio descriptions for visually impaired users.

Things to try

You can try generating various types of audio clips by providing different prompts to the Tango and Tango 2 models, such as:

- Everyday sounds (e.g., a dog barking, water flowing, a car engine revving)

- Natural phenomena (e.g., thunderstorms, wind, rain)

- Musical instruments and soundscapes (e.g., a piano playing, a symphony orchestra)

- Human vocalizations (e.g., laughter, cheering, singing)

- Ambient and abstract sounds (e.g., a futuristic machine, alien landscapes)

Experiment with the number of steps and guidance scale to find the right balance between sample quality and generation time for your specific use case.


audiogen


audiogen is a model developed by Sepal that can generate sounds from text prompts. It is similar to other audio-related models like musicgen from Meta, which generates music from prompts, and styletts2 from Adirik, which generates speech from text. audiogen can be used to create a wide variety of sounds, from ambient noise to sound effects, based on the text prompt provided.

Model inputs and outputs

audiogen takes a text prompt as the main input, along with several optional parameters to control the output, such as duration, temperature, and output format. The model then generates an audio file in the specified format that represents the sounds described by the prompt.

Inputs

- Prompt: A text description of the sounds to be generated

- Duration: The maximum duration of the generated audio (in seconds)

- Temperature: Controls the "conservativeness" of the sampling process, with higher values producing more diverse outputs

- Classifier Free Guidance: Increases the influence of the input prompt on the output

- Output Format: The desired output format for the generated audio (e.g., WAV)

Outputs

- Audio File: The generated audio file in the specified format

Capabilities

audiogen can create a wide range of sounds based on text prompts, from simple ambient noise to more complex sound effects. For example, you could use it to generate the sound of a babbling brook, a thunderstorm, or even the roar of a lion. The model's ability to generate diverse and realistic-sounding audio makes it a useful tool for tasks like audio production, sound design, and even voice user interface development.

What can I use it for?

audiogen could be used in a variety of projects that require audio generation, such as video game sound effects, podcast or audiobook background music, or even sound design for augmented reality or virtual reality applications. The model's versatility and ease of use make it a valuable tool for creators and developers working in these and other audio-related fields.

Things to try

One interesting aspect of audiogen is its ability to generate sounds that are both realistic and evocative. By crafting prompts that tap into specific emotions or sensations, users can explore the model's potential to create immersive audio experiences. For example, you could try generating the sound of a cozy fireplace or the peaceful ambiance of a forest, and then incorporate these sounds into a multimedia project or relaxation app.


whisper


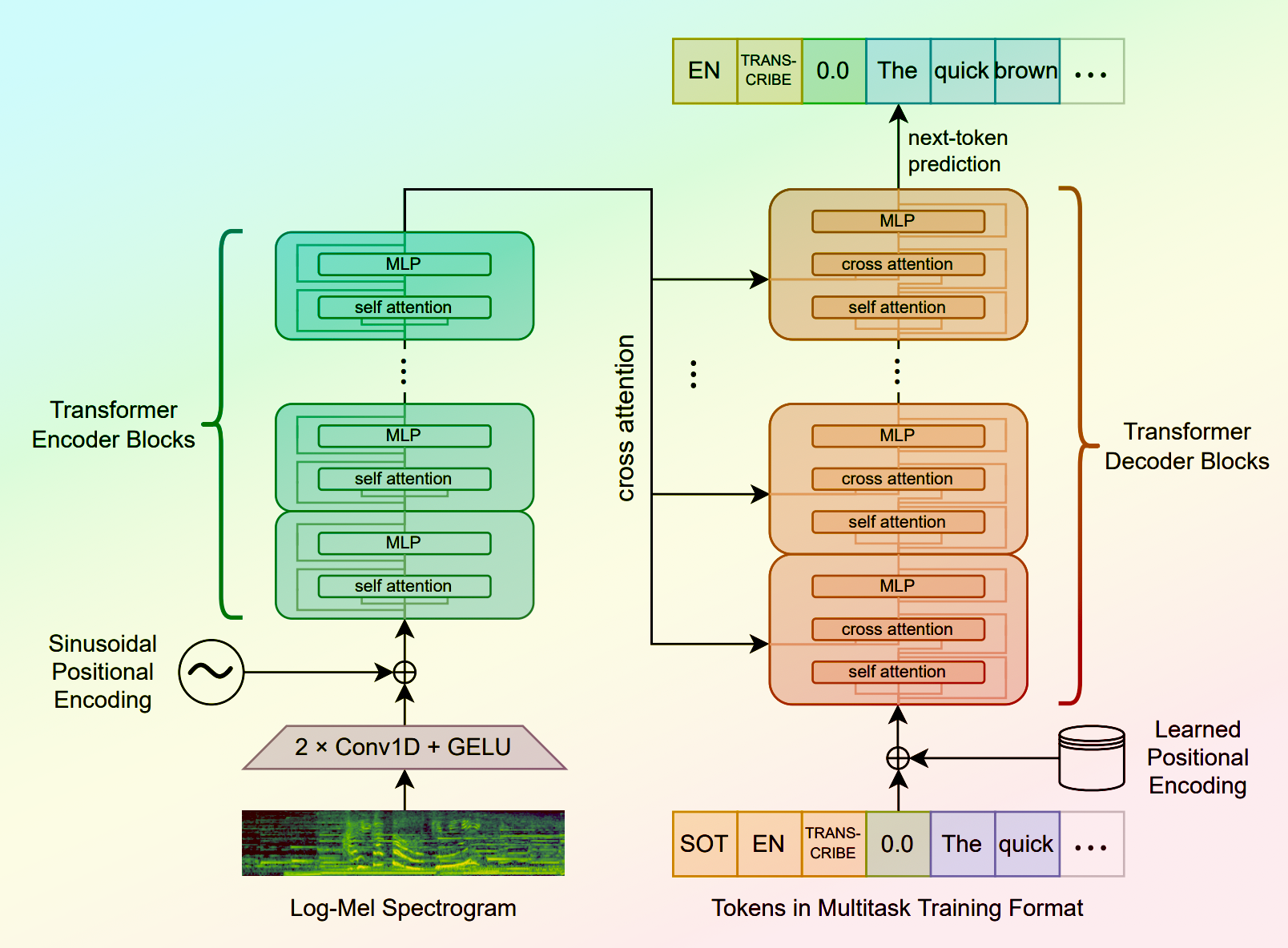

Whisper is a general-purpose speech recognition model developed by OpenAI. It is capable of converting speech in audio to text, with the ability to translate the text to English if desired. Whisper is based on a large Transformer model trained on a diverse dataset of multilingual and multitask speech recognition data. This allows the model to handle a wide range of accents, background noises, and languages. Similar models like whisper-large-v3, incredibly-fast-whisper, and whisper-diarization offer various optimizations and additional features built on top of the core Whisper model.

Model inputs and outputs

Whisper takes an audio file as input and outputs a text transcription. The model can also translate the transcription to English if desired. The input audio can be in various formats, and the model supports a range of parameters to fine-tune the transcription, such as temperature, patience, and language.

Inputs

- Audio: The audio file to be transcribed

- Model: The specific version of the Whisper model to use, currently only large-v3 is supported

- Language: The language spoken in the audio, or None to perform language detection

- Translate: A boolean flag to translate the transcription to English

- Transcription: The format for the transcription output, such as "plain text"

- Initial Prompt: An optional initial text prompt to provide to the model

- Suppress Tokens: A list of token IDs to suppress during sampling

- Logprob Threshold: The minimum average log probability threshold for a successful transcription

- No Speech Threshold: The threshold for considering a segment as silence

- Condition on Previous Text: Whether to provide the previous output as a prompt for the next window

- Compression Ratio Threshold: The maximum compression ratio threshold for a successful transcription

- Temperature Increment on Fallback: The temperature increase when the decoding fails to meet the specified thresholds

Outputs

- Transcription: The text transcription of the input audio

- Language: The detected language of the audio (if language input is None)

- Tokens: The token IDs corresponding to the transcription

- Timestamp: The start and end timestamps for each word in the transcription

- Confidence: The confidence score for each word in the transcription

Capabilities

Whisper is a powerful speech recognition model that can handle a wide range of accents, background noises, and languages. The model is capable of accurately transcribing audio and optionally translating the transcription to English. This makes Whisper useful for a variety of applications, such as real-time captioning, meeting transcription, and audio-to-text conversion.

What can I use it for?

Whisper can be used in various applications that require speech-to-text conversion, such as:

- Captioning and Subtitling: Automatically generate captions or subtitles for videos, improving accessibility for viewers.

- Meeting Transcription: Transcribe audio recordings of meetings, interviews, or conferences for easy review and sharing.

- Podcast Transcription: Convert audio podcasts to text, making the content more searchable and accessible.

- Language Translation: Transcribe audio in one language and translate the text to another, enabling cross-language communication.

- Voice Interfaces: Integrate Whisper into voice-controlled applications, such as virtual assistants or smart home devices.

Things to try

One interesting aspect of Whisper is its ability to handle a wide range of languages and accents. You can experiment with the model's performance on audio samples in different languages or with various background noises to see how it handles different real-world scenarios. Additionally, you can explore the impact of the different input parameters, such as temperature, patience, and language detection, on the transcription quality and accuracy.
